As engineering teams scale, Continuous Integration (CI) pipelines often become a bottleneck. The feedback loop slows down, and compute costs rise linearly with the number of jobs. This challenge is particularly acute in monorepos, where a single commit often triggers a matrix of linting, testing, and analysis jobs across multiple packages. In many of the slower workflows I've seen, the cause is one of two things: the same build step is repeated across parallel jobs, or jobs are kept serial specifically to avoid repeating the build.

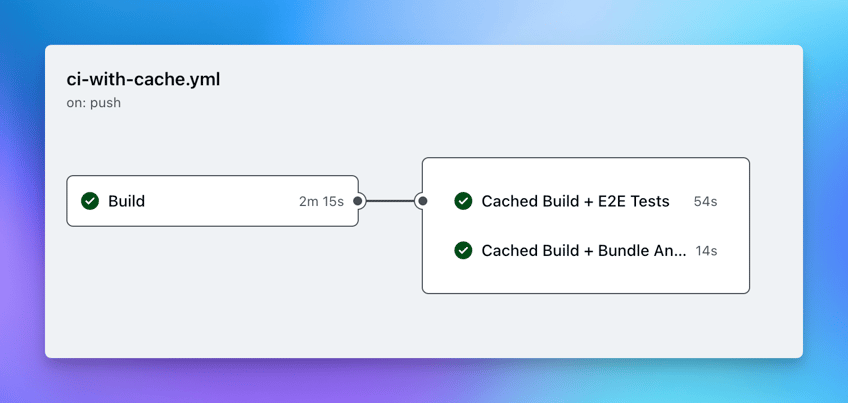

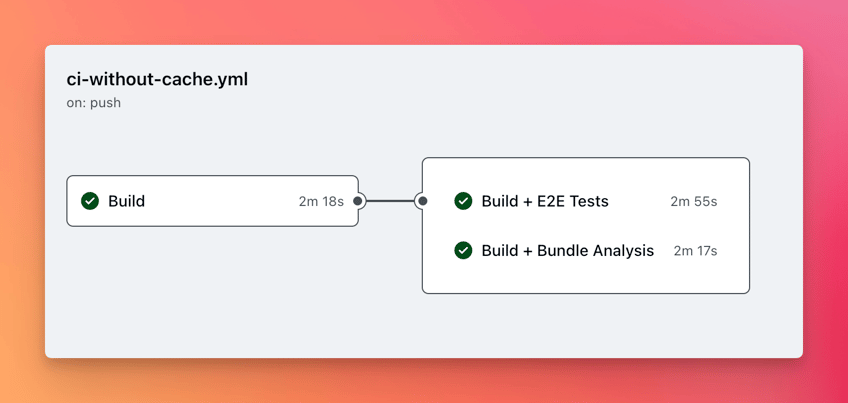

Here’s an example of two workflows that demonstrate the impact of sharing build artifacts between independent jobs.

In a distributed workflow, you might have separate jobs for Unit Tests, E2E Tests, and Bundle Analysis. If these jobs run in parallel, they typically function as isolated environments. While modern package managers (like pnpm or npm) have excellent mechanisms for caching downloaded dependencies based on lockfiles, they don’t solve the redundancy of the build process.

If three parallel jobs need the compiled application to run their tests, they often end up running the build script three times. This redundancy introduces:

- Wasted compute: compiling the same TypeScript/codebase multiple times.

- Increased cost: linearly increasing billable minutes for CPU-intensive tasks.

- Non-determinism: slight timing or environment differences could theoretically yield different build outputs.
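To make the redundancy concrete, here is a hypothetical workflow of the kind described above. The job names, the pnpm version, and the script names (`test:unit`, `analyze`) are assumptions for illustration and are not taken from the demo repo. The only point is that `pnpm build` appears in every job.

```yaml
# Hypothetical anti-pattern: three parallel jobs, three identical builds.
name: ci
on: [push]

jobs:
  unit-tests:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - uses: pnpm/action-setup@v2
        with:
          version: 8                # assumed pnpm version
      - run: pnpm install --frozen-lockfile
      - run: pnpm build             # build no. 1
      - run: pnpm run test:unit     # assumed script name

  e2e-tests:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - uses: pnpm/action-setup@v2
        with:
          version: 8
      - run: pnpm install --frozen-lockfile
      - run: pnpm build             # build no. 2, same commit, same output
      # Browser installation omitted for brevity
      - run: pnpm exec playwright test

  bundle-analysis:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - uses: pnpm/action-setup@v2
        with:
          version: 8
      - run: pnpm install --frozen-lockfile
      - run: pnpm build             # build no. 3
      - run: pnpm run analyze       # assumed script name
```

Dependency caching would speed up the `pnpm install` lines here, but each job would still pay for its own `pnpm build`.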

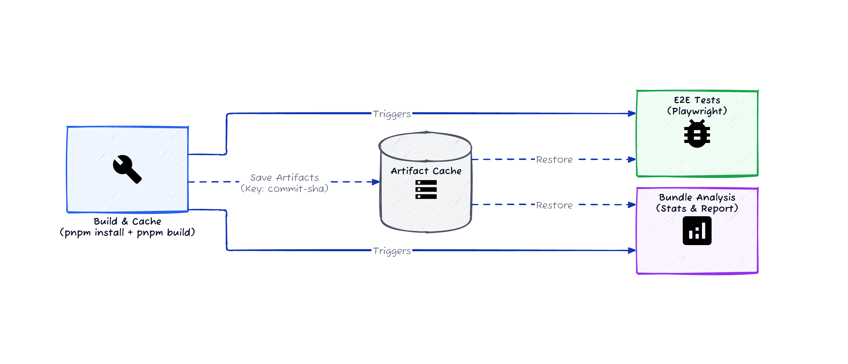

The architectural approach: Build once, consume everywhere

The optimal architecture treats the build process as a distinct, upstream producer. We build the application once, package the generated artifacts (e.g., dist/, .next/, build/), and distribute them to downstream consumer jobs.

While standard dependency caching relies on pnpm-lock.yaml (which rarely changes), artifact caching needs to be strictly tied to the source code state. By using the specific git commit SHA as our cache key, we can safely share build outputs between jobs running on the same commit.
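As a rough sketch of the shape this takes in GitHub Actions, the job graph might look like the following. The job names are assumptions chosen to match the jobs mentioned earlier, and the step bodies are reduced to comments so that only the producer/consumer relationship is visible.

```yaml
# Sketch of a producer/consumer job graph (step bodies reduced to comments).
jobs:
  build:                      # producer: the only job that compiles
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      # 1. declare a cache step whose key contains github.sha
      # 2. install dependencies and run the build
      # actions/cache saves the listed paths in a post-job step

  unit-tests:                 # consumer
    needs: build
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      # restore the cache with the same key, then run tests

  e2e-tests:                  # consumer
    needs: build
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      # restore the cache with the same key, then run tests

  bundle-analysis:            # consumer
    needs: build
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      # restore the cache with the same key, then analyse the bundle
```

The three consumers still run in parallel with each other; they only wait for the single build job to finish.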

A contrived example

To demonstrate this, I set up a pnpm monorepo containing a Vite+React application and a Playwright test suite. The goal is to build in one job and test in another, without rebuilding.

Here’s the link to the repo: https://github.com/Thinkmill/demo-actions-cache

Step 1: The producer (Build job)

The Build job is responsible for the heavy lifting: installing dependencies and compiling the application.

We use actions/cache with a commit-specific key (${{ github.sha }}). This is the critical distinction from dependency caching. We aren’t just caching what we downloaded; we are caching what we generated.
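To make the distinction concrete, the two kinds of cache step can be put side by side. This is a sketch rather than the demo repo's exact configuration: the pnpm store path in particular is an assumption (it varies by platform and pnpm version, and `pnpm store path` prints the real one).

```yaml
# Dependency cache: keyed on the lockfile, so it is reused across many commits.
- name: Cache pnpm store
  uses: actions/cache@v3
  with:
    path: ~/.local/share/pnpm/store   # assumed location; verify with `pnpm store path`
    key: ${{ runner.os }}-pnpm-${{ hashFiles('**/pnpm-lock.yaml') }}

# Artifact cache: keyed on the commit, so it is valid only for this exact source state.
- name: Cache build artifacts
  uses: actions/cache@v3
  with:
    path: apps/example-react-app/dist
    key: ${{ runner.os }}-build-${{ github.sha }}
```

The first key changes only when the lockfile changes. The second changes on every commit, which is what makes it safe to hand the output to other jobs in the same run.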

    # Build Job configuration
    - name: Cache Build Artifacts
      uses: actions/cache@v3
      with:
        # We cache the build output (dist)
        # Dependencies are automatically cached using pnpm store cache
        path: |
          apps/example-react-app/dist
          node_modules
        # The commit SHA ensures a unique cache entry for this run
        key: ${{ runner.os }}-build-${{ github.sha }}

Step 2: The consumers (Test & Analysis jobs)

Downstream jobs declare a needs: build dependency. They don’t need to run pnpm install or pnpm build. They simply restore the "hydrated" workspace state—containing both the compiled artifacts and the runtime dependencies—and start testing immediately.

    # E2E Tests Job configuration
    needs: build
    steps:
      - uses: actions/checkout@v3
      - name: Restore Build Artifacts
        uses: actions/cache@v3
        with:
          path: |
            apps/example-react-app/dist
            node_modules
          # Matching the key from the build job
          key: ${{ runner.os }}-build-${{ github.sha }}
          # Fail fast if the cache is missing, indicating an upstream failure
          fail-on-cache-miss: true
      - name: Run Playwright
        # Direct execution of tests without build overhead
        run: pnpm exec playwright test

Using fail-on-cache-miss: true is a good practice here. It ensures that if the artifact transfer fails, the job fails immediately rather than attempting to run tests against a non-existent build, providing clearer failure signals.
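One possible variation, which the snippet above does not use: the actions/cache repository also ships a restore-only action, `actions/cache/restore`. It accepts the same `path`, `key`, and `fail-on-cache-miss` inputs but never tries to save, which arguably expresses a consumer job's intent more directly. A minimal sketch, reusing the paths and key from the example:

```yaml
# Restore-only variant for consumer jobs (a variation, not the demo repo's config).
- name: Restore Build Artifacts
  uses: actions/cache/restore@v3
  with:
    path: |
      apps/example-react-app/dist
      node_modules
    key: ${{ runner.os }}-build-${{ github.sha }}
    # Still fail fast when the build job did not produce a cache entry
    fail-on-cache-miss: true
```

Whichever action is used, the `path` list and `key` must match the producer's exactly, because the cache entry is identified by both.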

Wrapping up

Adopting this "Build Once, Consume Everywhere" pattern yields immediate benefits:

- Cost savings: eliminating redundant build minutes directly reduces the billable time on GitHub Actions.

- Determinism: every test job runs against the exact same build artifacts, eliminating "flaky build" issues between parallel jobs.

- Pipeline clarity: CI job logs are cleaner. Test jobs show only test output, making debugging significantly easier.

Building a faster CI pipeline is an iterative process, and architectural changes like artifact caching often have the highest impact on CI time and expense. By decoupling the build process from verification steps, you create a pipeline that is both faster and more reliable. We have seen reductions of upwards of 40% in CI minutes on our client projects from sharing build artifacts between independent jobs, amongst other improvements.

For those looking to implement this in their own monorepos, the pattern is highly adaptable. I encourage you to audit your current workflows for redundant build steps, as removing them is often the lowest-hanging fruit for CI optimisation.